OOI Data Center Progress Report

Craig Risien, project manager for the U.S. National Science Foundation Ocean Observatories Initiative (OOI) Data Center, likens the role of the OOI Data Center to that of Gmail or Google Drive. “When people log into Gmail, it just works. They don’t think about servers, networking, backing up and whatever else behind the scenes is required to allow you to just log into your Google environment and send emails, create documents, or move or download files. Typically, people don’t think about that back-end infrastructure. That’s the type of user experience we are looking to achieve when we create data services such as the OOI JupyterHub.” After two years at the helm, helping to plan and build the new OOI 2.5 Data Center at Oregon State University, Risien says the Center is functioning well and users are starting to take advantage of OOI-provided infrastructure such as the OOI JupyterHub for research and teaching.

Oregon State University (OSU) was awarded the contract to run OOI’s Data Center starting in October 2020. In three and a half years, the Data Center team built two data centers. To accomplish this, they carried out two migrations: first moving the original OOI Data Center from Rutgers University to OSU, and then moving the OOI 2.0 Data Center to the new OOI 2.5 Data Center. The OSU data team completed both migrations with virtually no downtime.

“We’ve had less than 90 minutes of total downtime when we transferred data to the current servers at OSU,” explained Risien. “And the majority of that downtime wasn’t because of hardware issues or moving data. We actually took the opportunity presented by these kinds of migrations to improve the system and make it work better for the next five years.”

The system downtime was associated with hardware migrations, when the team had to switch from old firewalls to new ones. This switch required physically unplugging fiber cables from the old firewalls and moving them to the new ones. The switch was made early in the morning, so there was no significant impact on system availability. Risien laughed and said, “I think the total downtime there was about 20 minutes.”

The team used the brief downtime while data were being migrated to a new storage cluster to disaggregate the development software environments and to change the network design.

“We seized on the transfer to a new storage cluster to put network segmentation in place. In other words, we segment our network into different streams for different applications,” added Risien. “This improves our security posture because it makes it harder for somebody to move laterally through the system. It also allows for optimizing network performance and provides us with greater control over network traffic.”

Cybersecurity improvements

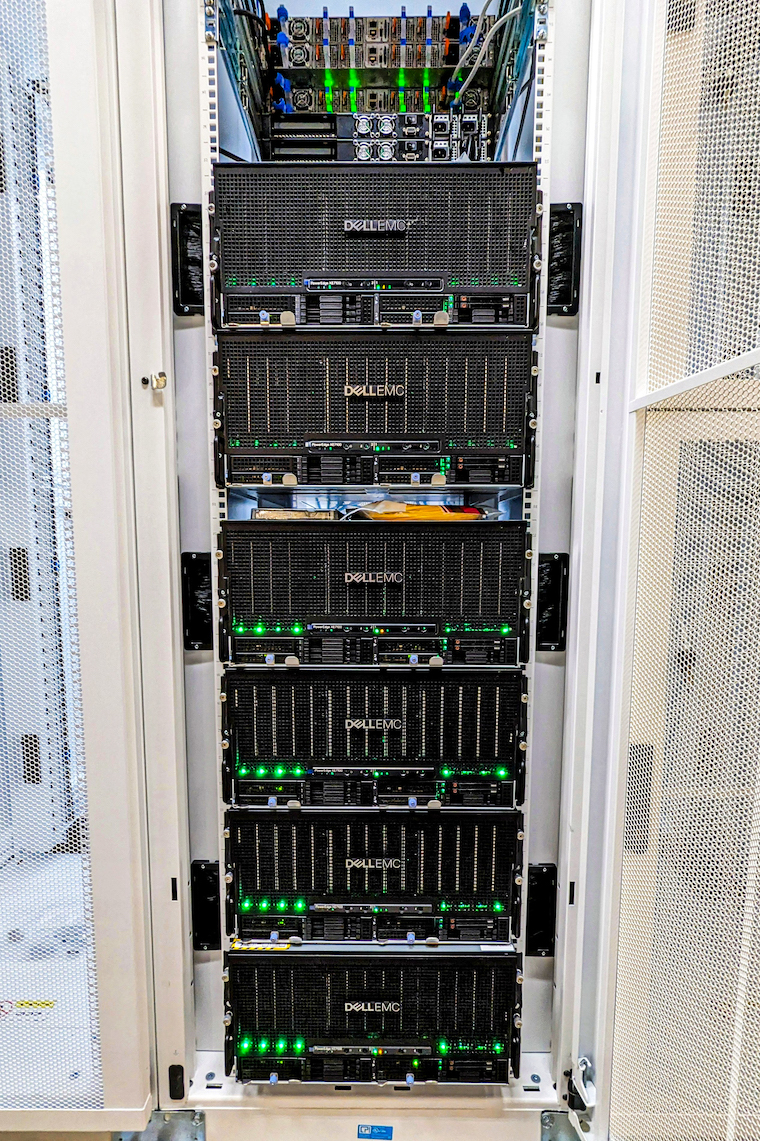

These successful data migrations are only the tip of the iceberg of what OSU’s data team has achieved over the past few years. Working with Dell, which provides the cyberinfrastructure hardware, the team shifted from a tape-based to a disk-based backup solution. In addition to an existing tape backup library at the Texas Advanced Computing Center, OOI’s data are now routinely backed up to a geographically remote storage cluster in Bend, Oregon. “Backing up OOI data on disk in Bend is important because we now have an immutable copy of OOI data in a remote location. This means we could recover from a disaster much faster than with data stored on tape. Disk recovery would take days to weeks, as opposed to possibly many months from data stored on tape.”

Other cybersecurity improvements have been made over the last few years. The Data Center now has a storage solution – a Dell Data Domain – that provides an “air-gapped” backup of virtual machines that do the system monitoring, computation, and data delivery to end users.

During the third week of March 2024, the team finished copying all raw data and virtual machine backups to the storage cluster in Bend. The transfer of 1.8 PB of data took about 10 days, with transfer rates at times exceeding 60 Gb/s, which is about 20 times faster than the speeds a person will typically see when copying data to an external solid-state drive.
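To put those figures in perspective, the short Python sketch below works through the arithmetic. It uses only the numbers quoted above (1.8 PB, about 10 days, peaks of 60 Gb/s, a factor of 20) and assumes decimal units, where 1 PB is 10^15 bytes and 1 Gb/s is 10^9 bits per second. The function names are illustrative and are not part of any OOI tooling.

```python
# Back-of-the-envelope check of the transfer figures quoted in the article.
# Assumption: decimal units (1 PB = 10**15 bytes, 1 Gb/s = 10**9 bits/s).

TOTAL_BYTES = 1.8e15      # 1.8 PB copied to the Bend storage cluster
DURATION_DAYS = 10        # "about 10 days"
PEAK_GBPS = 60            # rates "at times exceeding 60 Gb/s"
SSD_FACTOR = 20           # "about 20 times faster" than an external SSD

SECONDS_PER_DAY = 86_400


def average_rate_gbps(total_bytes: float, days: float) -> float:
    """Average throughput in Gb/s needed to move total_bytes in the given days."""
    total_bits = total_bytes * 8
    return total_bits / (days * SECONDS_PER_DAY) / 1e9


def hours_at_rate(total_bytes: float, gbps: float) -> float:
    """Hours needed to move total_bytes at a sustained rate of gbps."""
    total_bits = total_bytes * 8
    return total_bits / (gbps * 1e9) / 3600


if __name__ == "__main__":
    avg = average_rate_gbps(TOTAL_BYTES, DURATION_DAYS)
    peak_hours = hours_at_rate(TOTAL_BYTES, PEAK_GBPS)
    implied_ssd_gbps = PEAK_GBPS / SSD_FACTOR

    print(f"Average rate over the transfer: {avg:.1f} Gb/s")
    print(f"Time if the peak rate were sustained: {peak_hours:.1f} hours")
    print(f"Implied external SSD speed: {implied_ssd_gbps:.1f} Gb/s")
```

Under these assumptions the average rate works out to roughly 17 Gb/s, which is consistent with the 60 Gb/s figure being a peak rather than a sustained rate.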

“Obviously, scientific data are critical in a recovery situation, but so are the virtual machines that process the data,” added Risien. “This air-gapped backup of our virtual machines would allow us to recover far faster in a disaster recovery situation. We take security, backing up of data and our virtual machines very seriously. About a third of our budget was spent on the systems that create these air-gapped immutable backups of our data and virtual machines to make sure OOI data are secure and retrievable, if needed.”

A massive data transfer

Risien attributed the seamless transfer of vast amounts of data and hundreds of virtual machines to the improved network speeds and technologies involved. One example he gave was the compute cluster, which consists of 16 VxRail appliances that run the virtual machines. “Using VMware vMotion we were able to move hundreds of running virtual machines from our OOI 2.0 VxRail clusters to the OOI 2.5 cluster, with no downtime or service disruptions, unbeknownst to our program partners or external users, which I find remarkable.”

What is also remarkable is that these data transfers and security improvements were accomplished seamlessly by a very small team. In addition to Risien, Anthony Koppers serves as Cyberinfrastructure Principal Investigator (PI), Jim Housell as the IT Architect, Casey Dinsmore as the DevOps Engineer, and Pei Kupperman as the Finance Lead. “Our small team was able to move this massive amount of data, securely without downtime, in part because of a really productive partnership with Dell, which brought a complete solution to the table. And we’ve worked with other great partners at the Cascade Divide Data Center, and the OSU networking team, without whom we wouldn’t have been able to make the connection out to Bend. Good partnerships, and great people coming together was what made this all happen.”
