Posts Tagged ‘Data’

Near Real-time CTD Data from Irminger 8 Cruise (August 2021)

In August 2021, members of the OOI team aboard the R/V Neil Armstrong for the eighth turn of the Global Irminger Sea Array and onshore members of OSNAP (Overturning in the Subpolar North Atlantic Program) are making near real-time shipboard CTD data available.

Onshore expert hydrographer, Leah McRaven (PO WHOI) from the US OSNAP team, is working with the shipboard team to support collection of an optimized hydrographic data product. A special feature of this collaboration is the near real-time sharing of OOI shipboard CTD data with the public. Interested parties will have access to the same CTD profiles that McRaven will be reviewing.

McRaven is sharing her reports here while the cruise is underway:

BLOGPOST #4 (September 13, 2021)

Another OOI cruise is in the books! Now that things have wrapped up and I’ve had a chance to dig into the data a bit more thoroughly, how did we do? In my previous post I reported that the Irminger 8 CTD data looked to be very promising, but I like to include one more step before recommending data to be used for science: carefully considering salinity bottle data.

Salinity bottle data can be used in many ways to support a particular scientific objective or research question. The two that I’ve become most familiar with are 1) to support the analysis of additional bottle samples (e.g. dissolved oxygen) and 2) to provide an additional assessment and calibration of the CTD conductivity sensors. Both applications are necessary when researchers require salinity values more accurate than what CTD sensors are able to provide. However, even if this is not required, it can help ensure that users receive data that are reasonably within manufacturer specifications.

I find it easiest to consider the GO-SHIP approach to bottle data first. Using ship-based hydrography, GO-SHIP provides approximately decadal resolution of the changes in inventories of heat, freshwater, carbon, oxygen, nutrients, and transient tracers, covering the ocean basins with global measurements of the highest required accuracy to detect these changes. For a program like this, 36 salinity samples are taken at every CTD station in order to provide an extremely accurate and precise calibration for the CTD sensors. Interestingly, the Irminger OOI array is bracketed by three GO-SHIP repeat transects. While GO-SHIP provides invaluable measurements, drawing a large number of samples can be expensive and time consuming. Additionally, measurements occur on a decadal timescale, so there is a lot of the picture we miss.

One of the research programs that aims to provide a higher temporal and spatial resolution picture of the North Atlantic is OSNAP. This program has several scientific objectives, but generally aims to quantify intra-seasonal to interannual variability of the Atlantic Meridional Overturning Circulation (AMOC) in the subpolar Atlantic. This includes a focus on heat and freshwater fluxes, pathways of currents throughout the region, and air-sea interaction, all of which require highly calibrated data products. In order to accomplish this, PIs from the program need to be able to consistently merge their shipboard and moored data products for cohesive and accurate quantification of parameters. Because much of the variability being studied is so large, researchers do not necessarily need salinity accuracies at the level of GO-SHIP, but they do need to use salinity bottle samples to ensure that CTD casts are at the very least within manufacturer specifications.

In the end, no one approach to hydrographic sampling is necessarily better than another. What is important is the delicate balance of resources while at sea that best supports the scientific objectives. For both OSNAP and OOI, where the primary work at sea is focused on servicing moorings, a GO-SHIP approach to sample bottle collection is simply not feasible with the resources available. However, one very key feature of the OOI CTD data is that they are collected annually, while OSNAP data are collected every two years, and GO-SHIP data are collected every ten years. Hence, OOI is able to fill in some of the temporal data gaps in the region and greatly bolster many of the international programs working in the region.

This year OOI collaborated with numerous PIs and representatives from research programs that operate in the Irminger Sea region to produce a more optimized CTD and bottle sampling strategy that better complements goals similar to GO-SHIP, as well as several additional objectives from international programs, such as OSNAP. The goal of this updated plan was to provide OOI data end users and collaborators with data that are more appropriate for CTD, mooring, glider, and float instrumentation calibration purposes. In particular, the update included increased sampling of the deep ocean. Such data are critical in the Irminger Sea region due to the uniquely large variability of temperature, salinity, and chemical properties throughout the shallow and intermediate depths of the water column. Deeper CTD and bottle data will allow all end users to more carefully reference their scientific findings to more stable water masses and allow for better intercomparison with other available datasets, such as the available GO-SHIP and OSNAP data from the region.

The majority of methods that I use when considering salinity bottle data have been adapted from GO-SHIP and NOAA/PMEL. In particular, many of the cruises I work with, including OOI, often have far fewer bottle samples than recommended by GO-SHIP or PMEL methods. This isn’t necessarily bad, since we don’t need to achieve the same goals as those programs. However, great care does need to be taken in adapting methods (and I encourage you to reach out if this is something you have an interest in). So, with the improved OOI sampling scheme, what are the potential benefits to CTD data quality?

More strategically planned salinity bottle sample collection allows users to:

- Decide if data from primary or secondary sensors are more physically consistent

- Identify times when CTD contamination was not obvious

- Assess manufacturer sensor calibrations

- Potentially provide a post-cruise calibration

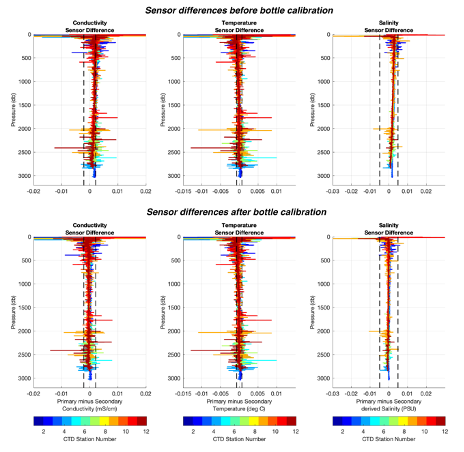

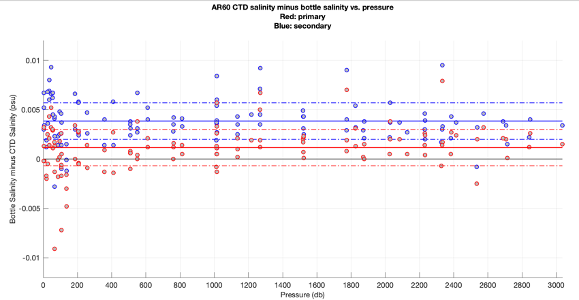

In the case of the Irminger 8 cruise, I see that all four uses of salinity bottle data are possible, which will make a lot of collaborators very happy! Starting with Figure 1, we can see a summary of CTD and bottle sample salinity differences as a function of pressure for both the primary and secondary sensors. As a rule of thumb, the average offset of these differences can be considered an estimate of sensor accuracy, and the spread, or standard deviation, can be considered an estimate of sensor precision. While the data have a fairly large spread to the eye, the standard deviations (indicated by the dashed lines) place the spread for each sensor within what we expect from the manufacturer precision. The striking result from this figure is that, before using the bottle data to further calibrate the data, the primary sensor had a higher accuracy than the secondary sensor. In going back to the Seabird Electronics calibration reports for the primary and secondary sensors (available via the OOI website), I noted that the calibrations for each sensor were a bit older than what we normally work with (last performed in May 2019). Additionally, the secondary sensor had a larger correction at its time of manufacturer calibration than the primary. This is corroborated by the differing sensor accuracies as determined by the bottle data. Lastly, while there are a few spurious differences shown, on average there don’t look to be any CTD and bottle differences due to factors other than expected calibration drifts.
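The rule of thumb above (mean offset as an accuracy estimate, standard deviation as a precision estimate) can be sketched in a few lines of Python. This is only an illustration: the function name and the sample values are made up for the example and are not Irminger 8 data.

```python
import numpy as np

def summarize_differences(ctd_salinity, bottle_salinity):
    """Return (mean offset, sample standard deviation) of CTD minus bottle salinity."""
    ctd = np.asarray(ctd_salinity, dtype=float)
    bottle = np.asarray(bottle_salinity, dtype=float)
    diff = ctd - bottle
    diff = diff[np.isfinite(diff)]  # skip pairs with a missing value
    # Mean offset ~ accuracy estimate; spread ~ precision estimate
    return diff.mean(), diff.std(ddof=1)

# Hypothetical values for illustration only
ctd = [34.902, 34.915, 34.921, 34.930]
bottle = [34.905, 34.917, 34.925, 34.932]
offset, spread = summarize_differences(ctd, bottle)
print(f"offset = {offset:+.5f}, spread = {spread:.5f}")
```

Running the same summary separately for the primary and secondary sensors gives the kind of side-by-side comparison described for Figure 1.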

Figure 1

In order to apply a calibration based on the bottle data to the CTD data, I first QC’d the bottle data and then followed methods described in the GO-SHIP manual. There are several sources of error that can contribute to incorrect salinity bottle values, ranging from poor sample collection technique to an accidental salt crystal dropping into a sample just before being run on a salinometer. This is why all methods of CTD calibration using bottle data stress the importance of using many bottle values in a statistical grouping. However, sometimes there are “fly-away” values that are so far gone they don’t contribute meaningful information to the statistics, and in those cases I simply disregard those values. As a reference, for the Irminger 8 cruise I threw out 9 of the ~125 salinity samples collected before proceeding with calibration methods. Note that within the methods described in the above documentation, systematic approaches are used to further control for outlier or “bad” bottle values.
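One common way to screen out “fly-away” values automatically is a robust threshold based on the median absolute deviation (MAD). The sketch below is an assumption for illustration and is not the QC procedure used for Irminger 8 or the one in the GO-SHIP manual; the function name, the `n_mad` cutoff, and the sample values are all hypothetical.

```python
import numpy as np

def reject_flyaways(diff, n_mad=5.0):
    """Return a boolean mask that is True for CTD-minus-bottle differences to keep."""
    diff = np.asarray(diff, dtype=float)
    median = np.nanmedian(diff)
    mad = np.nanmedian(np.abs(diff - median))
    if mad == 0:
        # No spread to scale by; keep every finite value
        return np.isfinite(diff)
    # 1.4826 scales the MAD to a standard deviation for normally distributed data
    limit = n_mad * 1.4826 * mad
    return np.abs(diff - median) <= limit

# Hypothetical differences; the fifth value mimics a contaminated sample
diff = [-0.003, -0.002, -0.004, -0.002, 0.051, -0.003]
keep = reject_flyaways(diff)
print(keep)
```

A median-based cutoff is used here because a single extreme value barely moves the median, whereas it can inflate a mean and standard deviation enough to hide itself.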

Figure 2

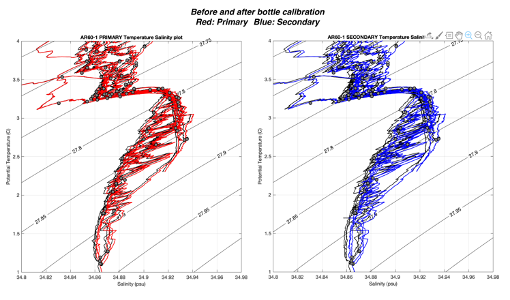

Since the majority of CTD stations for OOI are performed close to one another (and consequently in similar water masses), I grouped all stations together to characterize sensor errors. The resulting fits produced primary and secondary sensor calibrations that allow for more meaningful comparison of data with other programs. Figure 2 shows how primary and secondary data compare before and after bottle calibrations have been applied. Post calibration, primary and secondary sensors now agree more closely in terms of their differences. Similarly, Figure 3 summarizes data before and after calibration in temperature-salinity space, providing visual context for the magnitude of bottle calibration. Many folks working with CTD data would say that this is a rather small adjustment!
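To give a feel for what a grouped fit looks like, here is a deliberately simplified Python sketch that pools bottle samples from all stations and fits the bottle-minus-CTD salinity residual as a straight line in pressure. This is an assumption made for illustration: the methods described in the GO-SHIP manual are more involved, and the function names and synthetic values below are hypothetical.

```python
import numpy as np

def fit_correction(pressure, ctd_salinity, bottle_salinity, degree=1):
    """Fit bottle-minus-CTD salinity as a polynomial in pressure (all stations pooled)."""
    p = np.asarray(pressure, dtype=float)
    resid = (np.asarray(bottle_salinity, dtype=float)
             - np.asarray(ctd_salinity, dtype=float))
    good = np.isfinite(p) & np.isfinite(resid)
    return np.polyfit(p[good], resid[good], degree)

def apply_correction(coeffs, pressure, ctd_salinity):
    """Add the fitted correction to CTD salinity."""
    p = np.asarray(pressure, dtype=float)
    return np.asarray(ctd_salinity, dtype=float) + np.polyval(coeffs, p)

# Synthetic example: a CTD that reads fresh by 0.002 plus a small pressure-dependent term
pressure = np.array([100.0, 500.0, 1000.0, 2000.0, 2500.0])
bottle = np.array([34.900, 34.910, 34.920, 34.930, 34.935])
ctd = bottle - (0.002 + 1e-6 * pressure)

coeffs = fit_correction(pressure, ctd, bottle)
corrected = apply_correction(coeffs, pressure, ctd)
print(coeffs)
print(corrected - bottle)
```

With real data the residuals after correction will not vanish as they do in this synthetic case; their remaining spread is what indicates how well the fit describes the sensor error.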

Figure 3

Figure 4

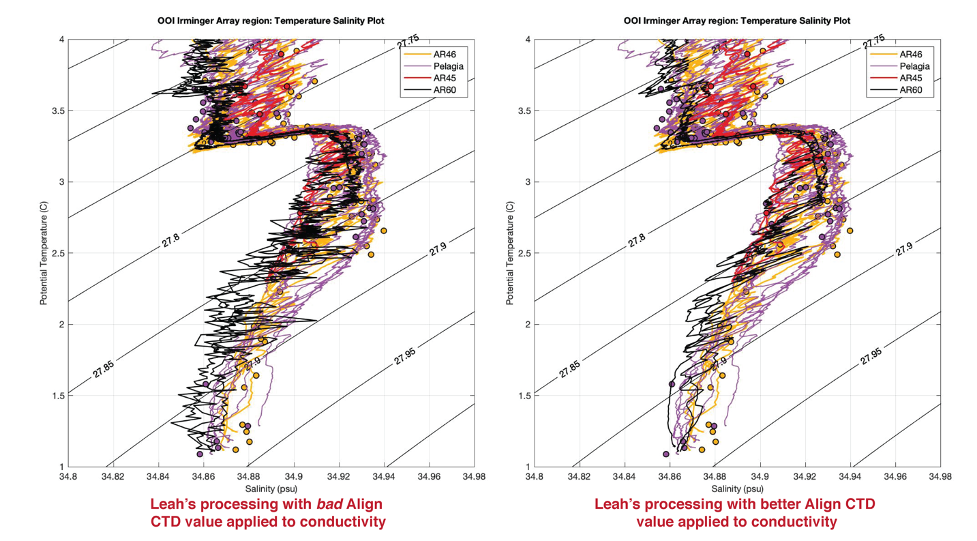

However, Figure 4 shows a comparison of the bottle-calibrated OOI data with nearby OSNAP CTD profiles from 2020. The results here are extremely important as OSNAP currently has moorings deployed near the OOI array and the OOI CTD profiles provide a midway calibration point for the moored instrumentation that is currently deployed for two years. These midway calibration CTD casts are critical in providing information on moored sensor drift and biofouling in a region where there has been a slow freshening of deep water (colder than 2.5 ºC) throughout the duration of these programs. Quantifying the rate of freshening is one of the objectives that OSNAP focuses on, but it is nearly impossible without high-quality CTD data for comparison. Figure 4 demonstrates that the freshening trend has continued from 2020 to 2021 and that the bottle-calibrated OOI CTD data will be critical for interpretation of moored data.

Finally, for those interested in the salinity-calibrated CTD dataset, please contact lmcraven@whoi.edu. A more detailed summary of the calibration applied can be found in my CTD calibration report here.

BLOGPOST #3 (August 18, 2021)

Irminger 8 science operations are now fully underway, which means the stream of CTD data is coming in hot (actually the ocean temps are very cold)! So far, CTD stations 4 through 11 correspond to work performed near the Irminger OOI array location. I spent the weekend and past couple of days paying close attention to these initial stations. Here’s an update on how things look so far.

One of the concerns this year is that the R/V Neil Armstrong is using a new CTD unit and sensor suite (new to the ship, not purchased new). Any time a ship’s instrumentation setup changes, it’s a very good idea to keep a close eye on things as changes naturally mean there’s more room for human error. What better way to talk about this than to share my own mistakes in a public blog! When I first downloaded and processed the OOI Irminger 8 (AR60) CTD data from near the Irminger OOI array location, I became very worried…

When I compared the Irminger 8 CTD data with three cruises from the same location last year, I was seeing very confusing and unphysical data in my plots. I was using Seabird CTD processing routines in the “SBE Data Processing” software (see https://www.seabird.com/software) that I had used for previous OOI Irminger cruises as a preliminary set of scripts, so I was confident that something strange with the CTD was going on. I immediately pinged folks on the ship to ask if there was anything that they could tell was strange on their side of things. Keep in mind, this OOI cruise focuses more on mooring work with only a handful of CTDs to support all additional hydrographic work, so any time there is a potential issue with the CTD data we want to address it as soon as possible. I started digging into things a bit more, and realized that I had made a mistake.

Within the SBE processing routines, there is a module called “Align CTD”. As stated in the software manual: “Align CTD aligns parameter data in time, relative to pressure. This ensures that calculations of salinity, dissolved oxygen concentration, and other parameters are made using measurements from the same parcel of water. Typically, Align CTD is used to align temperature, conductivity, and oxygen measurements relative to pressure.” When working in areas where temperature and salinity change rapidly with pressure (depth), this module can be very important (and I encourage you to read through its documentation). As you’ll see below, the OOI Irminger Sea Array region can see some extremely impressive temperature and salinity gradients. This has to do with the introduction of very cold and very fresh waters from near the coast of Greenland, together with the complex oceanic circulation dynamics of the region. Based on data collected on the old CTD installed on the Armstrong, I had determined that advancing conductivity by 0.5 seconds produced a more physically meaningful trace of calculated salinity.

While 0.5 seconds doesn’t seem large, it’s important to remember that most shipboard CTD packages are lowered at the SBE-recommended speed of 1 meter/second. Depending on how suddenly properties change as the CTD is lowered through the water, this magnitude of adjustment may seriously mess things up if it’s not the correct adjustment. In the case of the CTD system currently installed on the Armstrong, I’m finding that very little adjustment to conductivity is needed. There are many reasons as to why this value will change – from CTD to CTD, cruise to cruise, and even throughout a long cruise. The major factors are the speed at which water flows through the CTD plumbing and sensors and how far the pressure, temperature, and conductivity sensors are from each other in the plumbed line. Water flow is controlled by many things including CTD pump performance, contamination in the CTD plumbing, kinks in the CTD plumbing lines, etc. (for more information, start here: https://www.go-ship.org/Manual/McTaggart_et_al_CTD.pdf and here: file:///Users/leah/Downloads/manual-Seassoft_DataProcessing_7.26.8-3.pdf). Note that the SBE data processing manual provides great tips on how to choose values for the Align CTD module.
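To put the numbers in context: at a descent rate of 1 meter/second, a 0.5 second advance pairs each pressure reading with conductivity measured roughly 0.5 m further along the cast. The Python sketch below illustrates the idea of advancing one channel in time by interpolation. It is not the Align CTD implementation; the function name is hypothetical, and the 24 Hz scan rate is an assumption based on the SBE 911plus.

```python
import numpy as np

def advance_in_time(values, advance_seconds, sample_rate_hz=24.0):
    """Shift a sensor series earlier in time by advance_seconds.

    The value assigned to time t is the one recorded at t + advance_seconds.
    np.interp holds the last value for times past the end of the record.
    """
    values = np.asarray(values, dtype=float)
    t = np.arange(values.size) / sample_rate_hz
    return np.interp(t + advance_seconds, t, values)

# Synthetic conductivity channel: two seconds of scans at an assumed 24 Hz
conductivity = np.arange(48.0)
advanced = advance_in_time(conductivity, 0.5)

descent_rate_m_per_s = 1.0
print("scans shifted:", 0.5 * 24.0)
print("vertical offset (m):", 0.5 * descent_rate_m_per_s)
print(advanced[:3])
```

In a sharp gradient, half a meter of mismatch between temperature and conductivity is enough to produce spikes in calculated salinity, which is why an advance carried over from a different CTD can do more harm than good.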

Below is a figure that summarizes the impact on my processed data before and after correcting my mistake. This is a fun figure as it compares CTD data from four cruises that all completed CTDs near the OOI site: the 2020 OSNAP Cape Farewell cruise (AR45), the 2020 OOI Irminger 7 cruise (AR46), a 2020 cruise on R/V Pelagia from the Netherlands Institute for Sea Research, and stations 5-7 of the 2021 OOI Irminger 8 cruise (AR60). I’m plotting the data in what is called temperature-salinity space. This allows scientists to consider water properties while being mindful of ocean density, which as mentioned in the last post, should always increase with depth. I include contours indicating temperature and salinity values that correspond to lines of constant density (in this case I am using potential density referenced to the surface). For data to be physically consistent, we expect that the CTD traces never loop back across any of the density contours. These figures are also incredibly useful as the previous three cruises in the region give us some understanding of what to expect from repeated measurements near the Irminger OOI array.

The plot on the left shows the data processed with a conductivity advance of 0.5 sec. As you can see, the CTD traces appear much noisier than the other datasets, and contain many crossings of the density contours (i.e., density inversions). The plot on the right shows data that are smoother and less problematic in terms of density. You may also note that in the right plot, the AR60 traces are a bit shifted to the left in salinity (i.e. fresher or lower salinity values) when compared to the other datasets. This is because I am plotting bottle-calibrated CTD data from the other three cruises. Just as I type this, I’ve been given word that the shipboard hydrographer has begun analyzing salinity water sample data for Irminger 8. These bottle data are critical for applying a final adjustment to CTD salinity data and I’ll talk more about this in a future post.

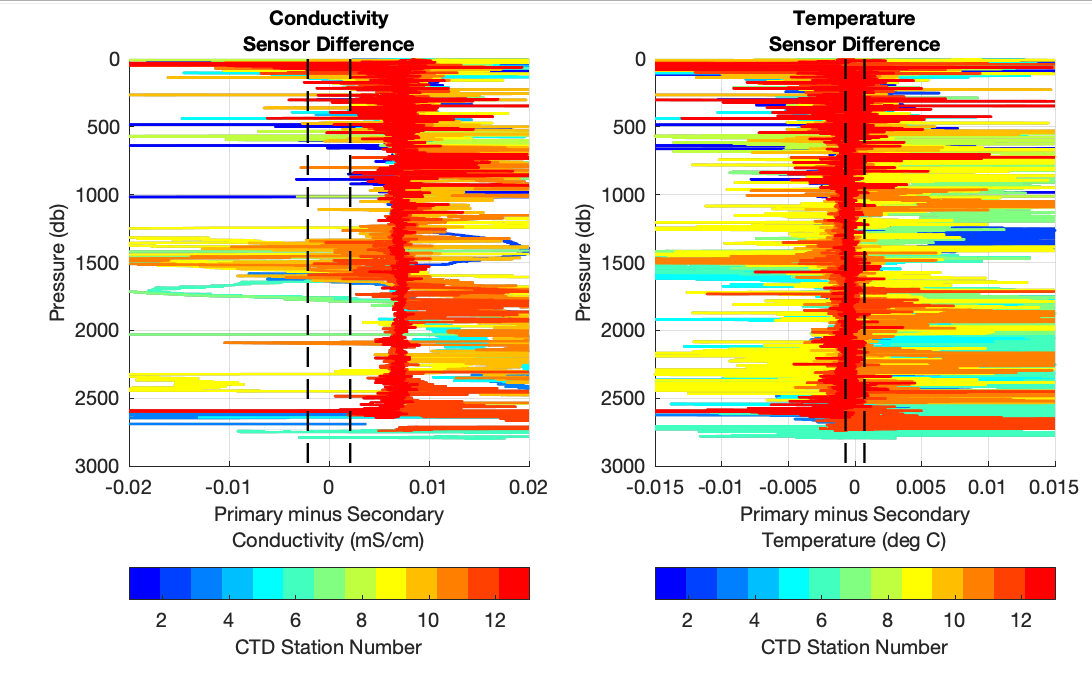

For now, I’m happy to report that the data look physically consistent! For completeness, I include the core CTD parameter difference plots from stations 4 through 11 (CTDs completed near the array thus far). CTD difference plots are described in my previous post. All checks out from where I am sitting so far. Thanks to the CTD watch standers and shipboard technicians for working so hard and taking good care of the system while the cruise is underway!

BLOGPOST #2 (August 10, 2021)

For this post I’d like to introduce some of the tools that folks can use to identify CTD issues and sensor health while at sea. Most of what I’ll be discussing here is specific to the SBE911 system that is commonly used on UNOLS vessels; however, a lot of these topics are relevant to other types of profiling CTD systems.

There are several kinds of end users of CTD data within science. These include people who perform CTDs along a track and complete what we call a hydrographic section (useful in studying ocean currents and water masses); those who perform CTDs at the same location year after year to look for changes; people who use CTDs to calibrate instrumentation on other platforms (moorings, gliders, AUVs, etc.); and those who use the CTD to collect seawater for laboratory analysis (collected samples can be used to further analyze physical, chemical, biological, and even geological properties!). For each of these CTD uses, a core set of CTD parameters is needed.

Core CTD parameters include pressure, temperature, and conductivity. Conductivity is used together with pressure and temperature measurements to derive salinity. These three variables are needed to give users the critical information of depth and density in which water samples are collected, and support the calculation of additional variables. For example, ocean pressure, temperature, and salinity together with a voltage from an oxygen sensor are needed to derive a value for dissolved oxygen. In addition to core CTD parameters, it is very common to add dissolved oxygen, fluorescence, turbidity, and photosynthetically active radiation (PAR) sensors to a shipboard CTD unit. Each of these additional measurements has errors that must be propagated from the core CTD measurements – creating a rather complex system to navigate when trying to understand the final accuracy of a given measurement.

For each CTD data application, varying degrees of accuracy are needed from the measured CTD parameters to accomplish the scientific objective at hand… And this is where a lot of folks get into trouble! For a first example, consider someone who would like to calibrate a nitrate sensor that is deployed for a year on a mooring using water samples collected from the CTD. For a second example, consider someone who is interested in the changing dissolved oxygen content of deep Atlantic Meridional Overturning Circulation waters. In both cases, the core CTD parameters are critical. In the first example, this person needs to know the pressure and ocean density at the exact location their water samples are collected during a CTD cast so they can correctly associate analyzed water sample values with the correct position of the sensor on the mooring. However, in the second example, this person may need to use both salinity and oxygen samples to improve the accuracy and precision of CTD measurements so that their final data product will be sensitive enough to resolve small, but potentially critical, changes in the ocean.

The most important takeaway here (CTD soapbox moment!) is that even if end users are not specifically interested in studying physical oceanographic parameters, they still need tools to verify that 1) the core CTD measurements are of high enough quality for use in their application and 2) that there are no unnecessary errors from the core measurements that are impacting their ability to address their scientific objective.

This is the first reason why I heavily encourage all CTD end users to become familiar not only with the accuracy of their particular measured parameter, but also the core CTD parameters. The second reason is that core CTD parameters are particularly useful in diagnosing early warning signs of CTD problems. Most shipboard systems install primary and secondary temperature and conductivity sensors on their CTDs, which provide an opportunity for in-situ sensor comparison. Additionally, calculated seawater density is particularly useful as it is one of the few properties we can make a strong assumption about – it should always increase with pressure. The density of seawater is determined by pressure, temperature, and salinity (conductivity), hence any time one or some of these recorded values is suspect, non-physical density “inversions” or “noise” may appear in the data record.
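The density check described here is easy to automate. The following Python sketch flags samples whose density falls below the maximum density seen at shallower pressures. The function name, the way an inversion is measured (deficit relative to a running maximum), and the sample profile are assumptions for illustration; the 0.1 kg/m³ default echoes the rough threshold mentioned elsewhere in this post.

```python
import numpy as np

def find_inversions(pressure, density, threshold=0.1):
    """Return (pressures, deficits) where density drops below its running maximum.

    The deficit is how far a sample sits below the densest water seen above it.
    """
    p = np.asarray(pressure, dtype=float)
    rho = np.asarray(density, dtype=float)
    order = np.argsort(p, kind="stable")  # make sure we scan from shallow to deep
    p, rho = p[order], rho[order]
    running_max = np.maximum.accumulate(rho)
    deficit = running_max - rho
    flagged = deficit > threshold
    return p[flagged], deficit[flagged]

# Hypothetical profile with one inversion at 100 dbar
pressure = [10.0, 50.0, 100.0, 150.0, 200.0]
density = [1027.0, 1027.3, 1027.1, 1027.4, 1027.6]
bad_pressure, bad_deficit = find_inversions(pressure, density)
print(bad_pressure, bad_deficit)
```

Lowering `threshold` makes the check pickier, which is useful for spotting small inversions but will also flag ordinary noise in strongly layered water.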

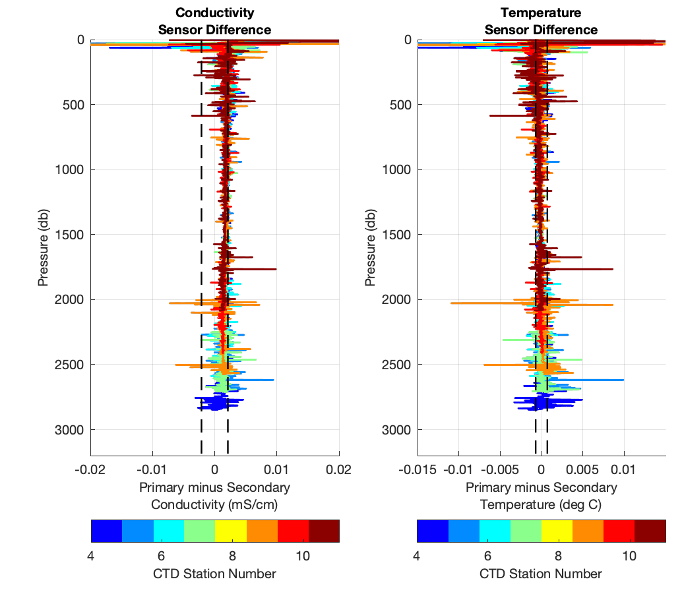

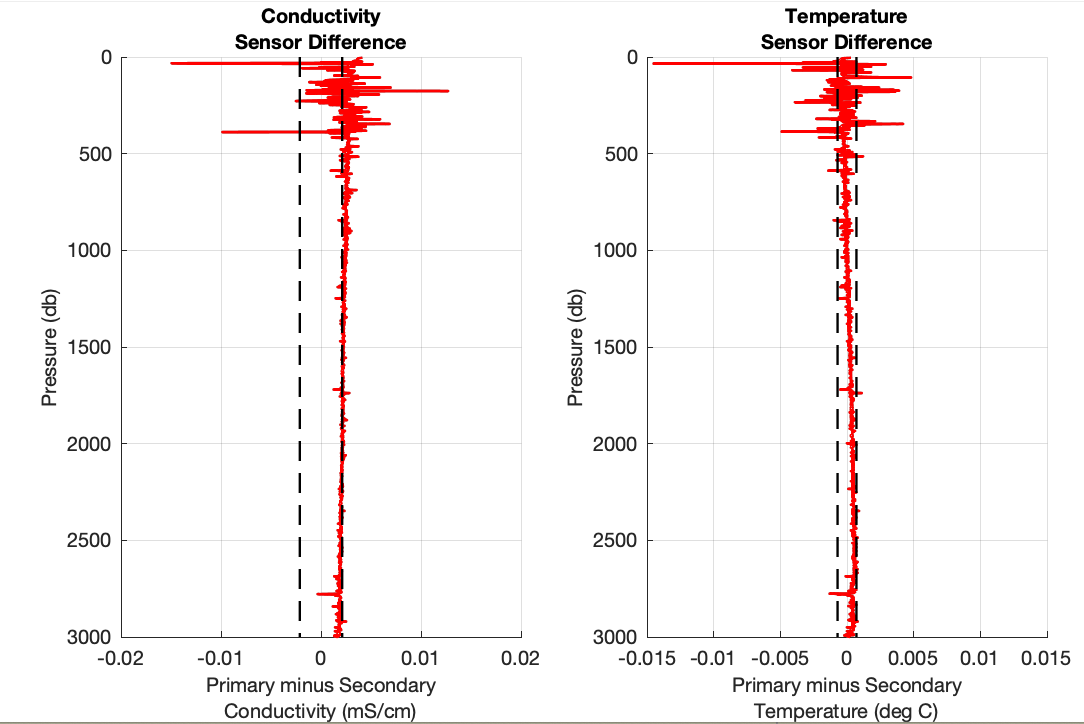

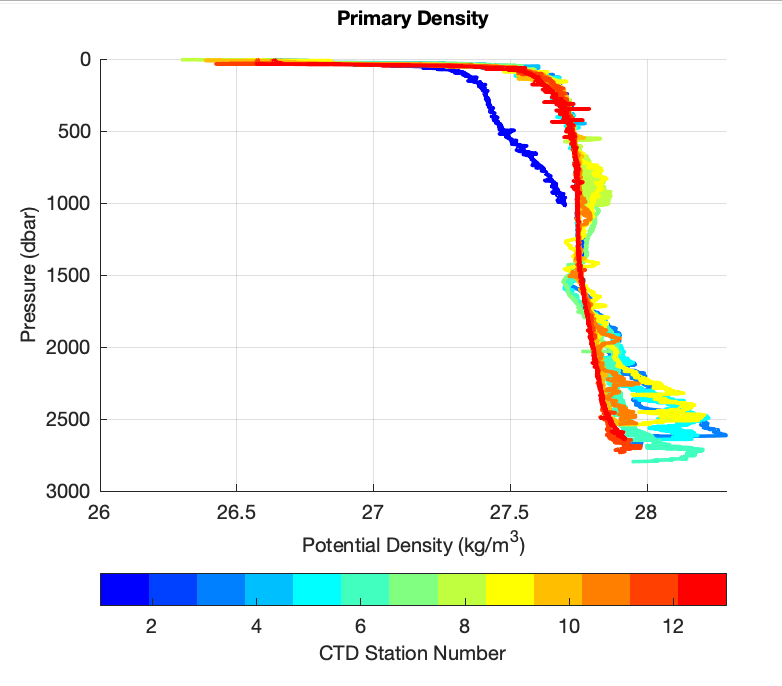

Below are two figures that can be very helpful in diagnosing CTD problems. In these examples, I am using the Irminger 8 (AR60-01) deep test cast, which took place late Sunday evening, August 8th. Figure 1 shows difference plots of the two sensor pairs (temperature and conductivity). Each panel includes vertical dashed lines indicating expected manufacturer agreement ranges (see sensor specification sheets datasheet-04-Jun15.pdf and datasheet-03plus-May15.pdf). The values shown are ±(2 x 0.001 ºC) and ±(2 x 0.003 mS/cm) for temperature and conductivity sensors, respectively (note that 0.003 mS/cm is close to 0.003 psu for reasonable temperature ranges). In general, sensor differences should fall within, or very close to, this range when calibrated by the manufacturer within the past year. The rule can be relaxed in the upper water column. However, differences between sensors deeper than approximately 500 m that consistently fall outside of this range indicate problematic sensor drift or contamination. Figure 2 shows the calculated seawater density profile using the primary sensors. Consistent density inversions larger than ~0.1 kg/m³ also indicate problematic sensor drift or contamination. When creating such figures, always look at the downcast and upcast (skipped here for the sake of brevity). The upcast will look a bit worse than the downcast (I encourage you to read about why), but those data are extremely important to anyone collecting water samples!
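For anyone who would rather compute than plot, the sensor-pair check can be reduced to a single number per cast: the fraction of deep samples where primary and secondary sensors disagree by more than the expected range. The Python sketch below is an illustration under stated assumptions: the function name and sample values are hypothetical, and pressure in dbar is treated as a stand-in for depth in meters.

```python
import numpy as np

TEMPERATURE_TOLERANCE = 2 * 0.001   # degrees C, from the agreement range above
CONDUCTIVITY_TOLERANCE = 2 * 0.003  # mS/cm, from the agreement range above

def fraction_outside(pressure, primary, secondary, tolerance, min_pressure=500.0):
    """Fraction of samples deeper than min_pressure where |primary - secondary| > tolerance."""
    p = np.asarray(pressure, dtype=float)
    diff = np.asarray(primary, dtype=float) - np.asarray(secondary, dtype=float)
    deep = (p > min_pressure) & np.isfinite(diff)
    if not deep.any():
        return float("nan")  # no deep samples to judge
    return float(np.mean(np.abs(diff[deep]) > tolerance))

# Hypothetical temperatures from a primary and secondary sensor
pressure = [100.0, 600.0, 1000.0, 1500.0, 2000.0]
t_primary = [5.0000, 3.5000, 3.2000, 2.9000, 2.5000]
t_secondary = [5.0100, 3.5005, 3.2004, 2.9031, 2.5002]

frac = fraction_outside(pressure, t_primary, t_secondary, TEMPERATURE_TOLERANCE)
print(frac)
```

A value near zero suggests the pair agrees within expectations at depth, while a value that stays high from cast to cast is the "consistently outside of this range" pattern worth raising with the shipboard team.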

Figure 1

Figure 2

So, what can these plots tell us about the CTD system implemented on the Irminger 8 cruise so far? Figure 1 demonstrates an overall acceptable level of agreement between the sensor pairs. The particular sensors in use right now have calibrations older than one year, so this level of agreement is actually quite good. Figure 2 is also rather promising in showing a density profile that is continuously increasing. If you’re being picky (like me), you may notice that there are some small density inversions between roughly 200-500 m. After taking a closer look, I noted that the salinity profile indicates that there are some rather impressive salinity intrusions evident in the upper 500 meters (I encourage you to download data from cast 2 and verify!). This is normal for the Labrador Sea region where the cast took place (lots of melting ice nearby) and will naturally create a bit more “noise” in these plots. So, I’m not very concerned by this.

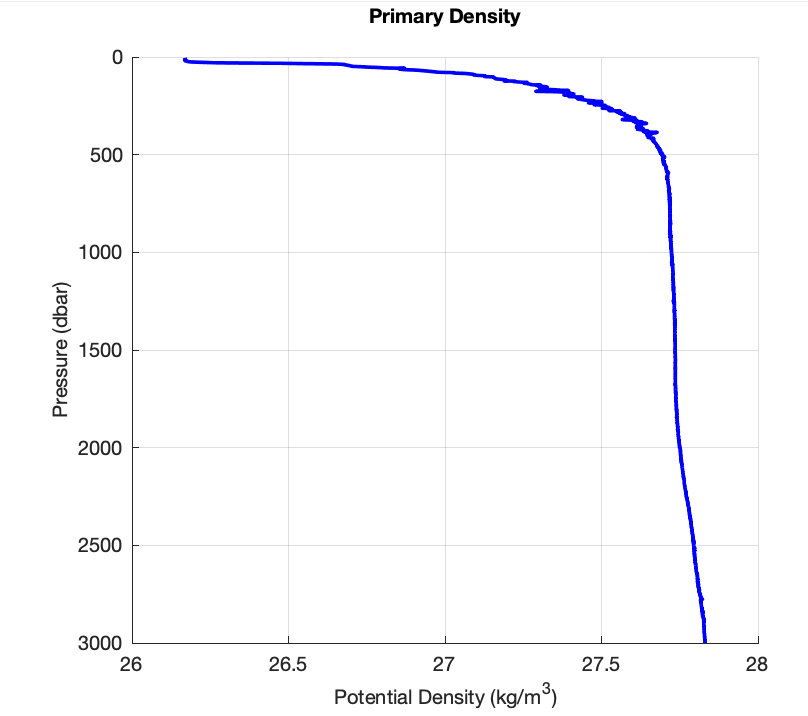

Now what do these plots look like when there’s a problem? There unfortunately isn’t one simple answer for this (I’ve been doing this for over ten years and am still learning subtle ways CTDs show problems!), but I’ll share two examples of when something was clearly wrong. The first example is from the Irminger 7 cruise (AR35-05). Figure 3 and Figure 4 show our two plots for stations 1-13 of the Irminger 7 cruise. Figure 3 shows a suspiciously large offset (well outside of the general threshold we expect in the conductivity differences) and incredibly noisy differences in both conductivity and temperature. Similarly, Figure 4 shows consistent and large density inversions for some of these casts. Several of the casts shown in Figures 3 and 4 were so bad that there are no usable profiles as far as scientific objectives are concerned. Luckily, however, there were a few casts in the set that could be corrected with water sample data (I’ll talk more about this later). Data loss is something that does happen while at sea, and the Irminger Sea in particular is an incredibly harsh environment to work in. However, if folks are diligent in creating these plots while at sea, the hope is that we can minimize time and data loss while striving for the highest quality data possible.

Figure 3

Figure 4

For my last example, I provide a quick reference guide for how core CTD parameter issues may look on a Seabird CTD Real-Time Data Acquisition Software (Seasave) screen. The reason for this is that a lot of people don’t have time to create fancy plots while at sea, so it’s helpful to know how to approach monitoring while watching the data come in. Follow the link here to download a one-page pdf that can be displayed next to your CTD acquisition computer.

BLOG POST #1 (August 2, 2021)

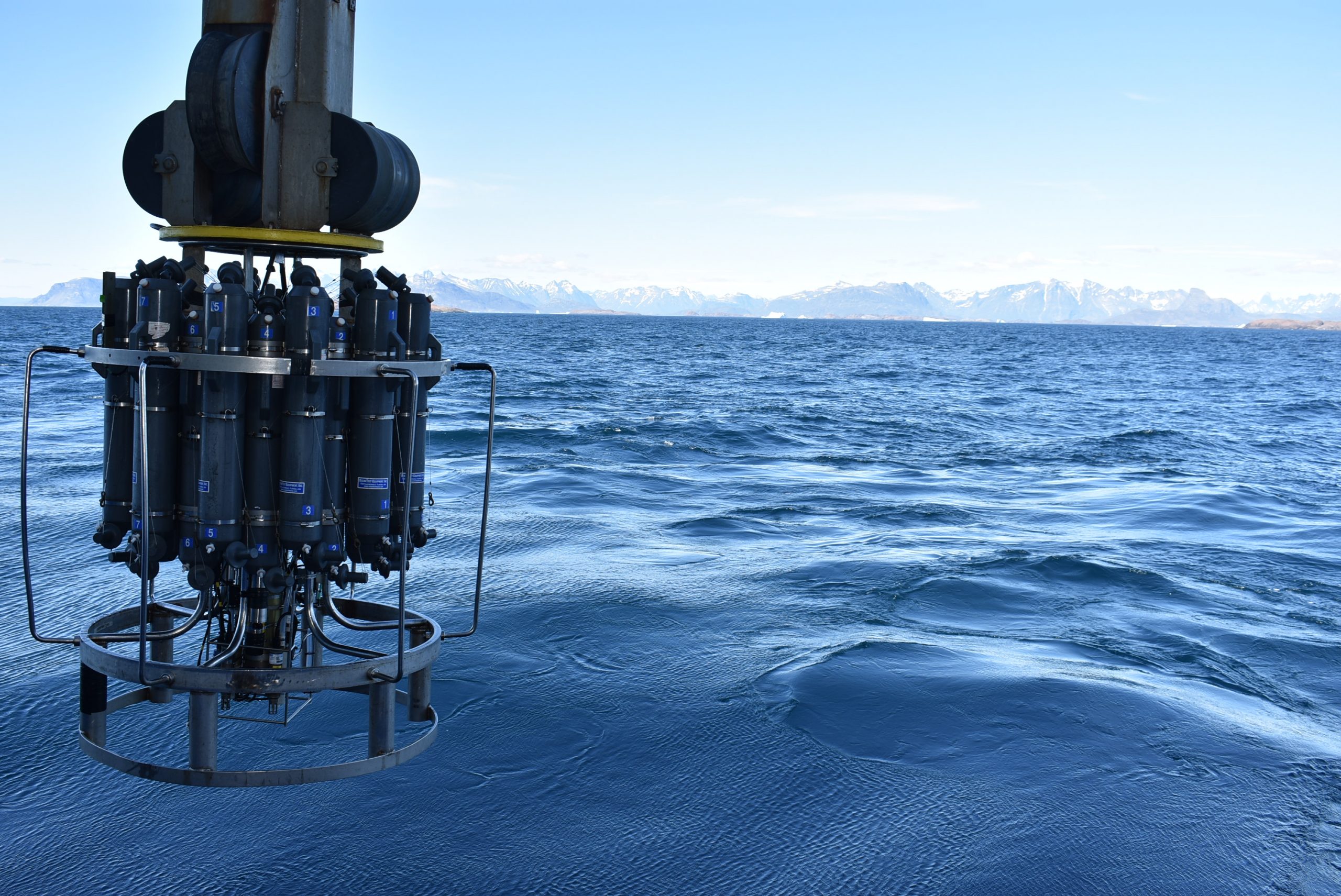

A CTD is performed near the coast of Greenland during one of the OSNAP 2020 cruises on R/V Armstrong. Photo: Isabela Le Bras © WHOI

Hello folks and welcome to the Irminger 8 CTD blog! As the cruise progresses, tune in here for updates on Irminger 8 CTD data quality as well as tips on how best to approach using OOI CTD data. I plan to keep this information inclusive for folks with varying levels of experience with shipboard CTD data – from beginner to expert! If you have any questions about CTD data, feel free to send me an email (lmcraven@whoi.edu) and I’ll do my best to help. For this first post, I would like to summarize some important resources available to the community that will greatly help with CTD data acquisition and processing.

CTDs have been around for a while, which on the surface makes them a bit less interesting than many of the new exciting technologies used at sea. The fact remains that the CTD produces some of the most accurate and reliable measurements of our ocean’s physical, chemical, and biological parameters. Aside from being very useful on their own, CTD data serve as a standard by which researchers can compare and validate sensor performance from other platforms: gliders, floats, moorings, etc. Sensor comparison is particularly important for instruments that are deployed in the ocean for a long time (as is the case for OOI assets) as it is normal for sensors to drift due to environmental exposure and biological activity. As it turns out, CTD data provide a backbone for all OOI objectives.

Leah McRaven helps to deploy a CTD during one of the OSNAP 2020 cruises on R/V Armstrong. Photo: Astrid Pacini, MIT/WHOI Joint Program

However, just because CTDs have been performed for decades, we can’t always assume that the collection of quality data is straightforward. For example, one of the unique challenges of collecting CTD data near the OOI Irminger site and Greenland region is that there is an elevated level of biological activity throughout the year. While biological activity is exciting for many researchers, it can clog instrument plumbing, build up on sensors, and just be plain annoying to watch out for. CTDs utilized in the Irminger Sea are also subject to extreme conditions such as cold windchills and rough sea state (Cape Farewell is actually the windiest place on the ocean’s surface!), leading to the potential for accelerated sensor drift and the need to send sensors back to manufacturers for more regular servicing and calibration. As one can imagine, there are a lot of potential sources of error when simply considering the environment that OOI Irminger CTD data are collected in.

To help combat some of these potential sources of error, I’ll be picking apart CTD and bottle data cast by cast to look for evidence of CTD problems during the Irminger 8 cruise. But before we can talk about unique sources of CTD data errors, it’s helpful to remember errors that can become systematic throughout the entire data arc: from instrument care, to acquisition, to data processing, and to final data application. Improving our awareness of these issues will allow all CTD data users the opportunity for more meaningful data interpretation. So before I move forward, I thought it would be important to share some of my favorite resources available on community-recommended CTD practices. I encourage folks to comb through these resources and find what might be most appropriate for your respective research objectives.

Recommended CTD resources are provided here.

New Round of Improvements for Data Explorer

The OOI Data Team continues to listen to data users’ feedback to refine and improve Data Explorer. Many of those improvements are reflected in the latest release of Data Explorer, version 1.2, which is now operational. Data Explorer was originally released in September 2020, and this latest version is the second round of improvements made by Axiom Data Science, working with the OOI Data Team.

This version makes more OOI data accessible online and brings new features for gliders and profilers. It is also now possible to search for cruise data in the tabular search interface. Once there, you can select specific cruises, see their data profiles, and have a three-dimensional view of where the samples were taken. Glider data are also now available online and searchable by time and location. Once you’ve identified a glider of interest, it is possible to map or plot the glider’s route and compare data collected with data from other sensors. With another click, you can compare sensor data with profiler information, then change the parameters on the screen to learn more.

All data can be downloaded in CSV, GeoJSON, KML, and Shapefile formats for future use.

Additionally, discrete sample data (chemical analyses of seawater collected during shipboard verification sampling) have been added. Water samples are collected during OOI cruises at multiple depths, and analyzed for oxygen (Winkler), chlorophyll-a fluorescence and pigment distribution, nitrate/nitrite, and potentially a full nutrient suite, total DIC (dissolved inorganic carbon) and total alkalinity, pH, and salinity. These data can be used to compare to in situ instrument data or CTD casts in order to ensure OOI data quality. It is now possible to use Data Explorer 1.2 to convert discrete dissolved oxygen sample data from milliliters to micromoles and create standard_name mapping for discrete sample data.
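For readers who want to reproduce the oxygen unit conversion outside of Data Explorer, here is a minimal sketch. It assumes the discrete values are reported in mL/L and uses the molar volume of oxygen at standard temperature and pressure (22.3916 L/mol). The function names are hypothetical, and this is not necessarily the routine Data Explorer itself applies.

```python
# Molar volume of O2 at standard temperature and pressure, in L/mol.
O2_MOLAR_VOLUME_L_PER_MOL = 22.3916


def o2_ml_per_l_to_umol_per_l(o2_ml_per_l: float) -> float:
    """Convert dissolved oxygen from mL/L to umol/L."""
    # 1 mL of O2 gas at STP holds 1000 / 22.3916 micromoles.
    return o2_ml_per_l * 1000.0 / O2_MOLAR_VOLUME_L_PER_MOL


def o2_ml_per_l_to_umol_per_kg(o2_ml_per_l: float, density_kg_per_m3: float) -> float:
    """Convert dissolved oxygen from mL/L to umol/kg.

    density_kg_per_m3 is the seawater density chosen by the analyst
    (an assumption of this sketch; the appropriate density depends on
    the sampling protocol).
    """
    umol_per_l = o2_ml_per_l_to_umol_per_l(o2_ml_per_l)
    density_kg_per_l = density_kg_per_m3 / 1000.0
    return umol_per_l / density_kg_per_l


if __name__ == "__main__":
    # Example: a 6.0 mL/L Winkler value in water of density 1027 kg/m^3.
    print(round(o2_ml_per_l_to_umol_per_l(6.0), 2), "umol/L")
    print(round(o2_ml_per_l_to_umol_per_kg(6.0, 1027.0), 2), "umol/kg")
```

Keeping the volumetric and per-mass conversions separate makes it explicit where the density assumption enters.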

In response to users’ feedback, many defects found in version 1.1 have been fixed. A summary of all the new features and bug fixes is available in the release notes.

“Data Explorer is a tool that allows users to access, manipulate, and understand OOI data for use in their research and classroom,” said Jeff Glatstein, OOI Data Delivery Lead and Senior Manager of Cyberinfrastructure. “Users’ feedback has been, and will continue to be, extremely useful in refining Data Explorer to ensure it meets users’ needs and expectations. We are holding regular open meetings as one way to ensure that we receive timely feedback and work with our users to meet their needs.”

An open OOI Town Hall previewing some of the new and special glider-related features was held on 24 August 2021, where user input was welcomed. A recording of the session is available here.

Read More

OOI Data Center Transferred to OSU

It’s official. As of Friday 30 July 2021, OOI data are now being stored and served on their new Cyberinfrastructure housed at the OOI Data Center at Oregon State University (OSU). The transfer of data from Rutgers, the State University of New Jersey, has been in the works since last October, when OSU was awarded the contract for the center.

“The transfer of 105 billion rows of data was nearly seamless, which attests to the collaboration between the OOI technical and data teams,” said Jeffrey Glatstein, OOI Data Delivery Lead and Senior Manager of Cyberinfrastructure. “It literally took a village and we are grateful to the marine implementing organizations at the University of Washington, Woods Hole Oceanographic Institution, and Oregon State University for their part in making this transfer happen while data continue to be collected 24/7.”

OSU Project Manager Craig Risien added, “July 30, 2021 was the culmination of about ten months of working with our Dell EMC partners, the OOI Marine Implementing Organizations, and the OOI Cyberinfrastructure team to deliver a state-of-the-art and highly extensible data center to meet OOI’s present and future data handling needs. We are very pleased with how the data center migration project has proceeded, thus far.”

[feature][caption id="attachment_21888" align="aligncenter" width="519"] The primary repository of OOI raw data is the Dell EMC Isilon cluster, a scale out network-attached storage platform for storing high-volume, unstructured data. Photo: Craig Risien, OSU.[/caption]

[/feature]

OSU’s Data Center was designed to handle telemetered, recovered, and streaming data for OOI’s five arrays that include more than 900 instruments. Telemetered data are delivered to the Data Center from moorings and gliders using remote access such as satellites. Recovered data are complete datasets that are retrieved and uploaded once an ocean observing platform is recovered from the field. Streaming data are delivered in real time directly from instruments deployed on the cabled array.

“The OSU Data Center includes modern storage solutions, Palo Alto next-generation firewalls that ensure system security, and a hyperconverged ‘virtual machine’ infrastructure that makes the OOI software and system easier to manage and more responsive to internal and external data delivery demands,” explained OSU Principal Investigator Anthony Koppers. “With this equipment now operational at OSU, we are well positioned to seamlessly serve the more than 1 PB of critically important data collected by the OOI to the wide-ranging communities doing marine research and education with ample space to grow well into the future.”

Read More

A Case Study for Open Data Collaboration

Recognizing that freely accessible ocean observatory data has the potential to democratize interdisciplinary science for early career researchers, Levine et al. (2020) set out to demonstrate this capability using the Ocean Observatories Initiative. Publicly available data from the OOI Pioneer Array moorings were used, and members of the OOI Early Career Scientist Community of Practice (OOI-ECS) collaborated in the study.

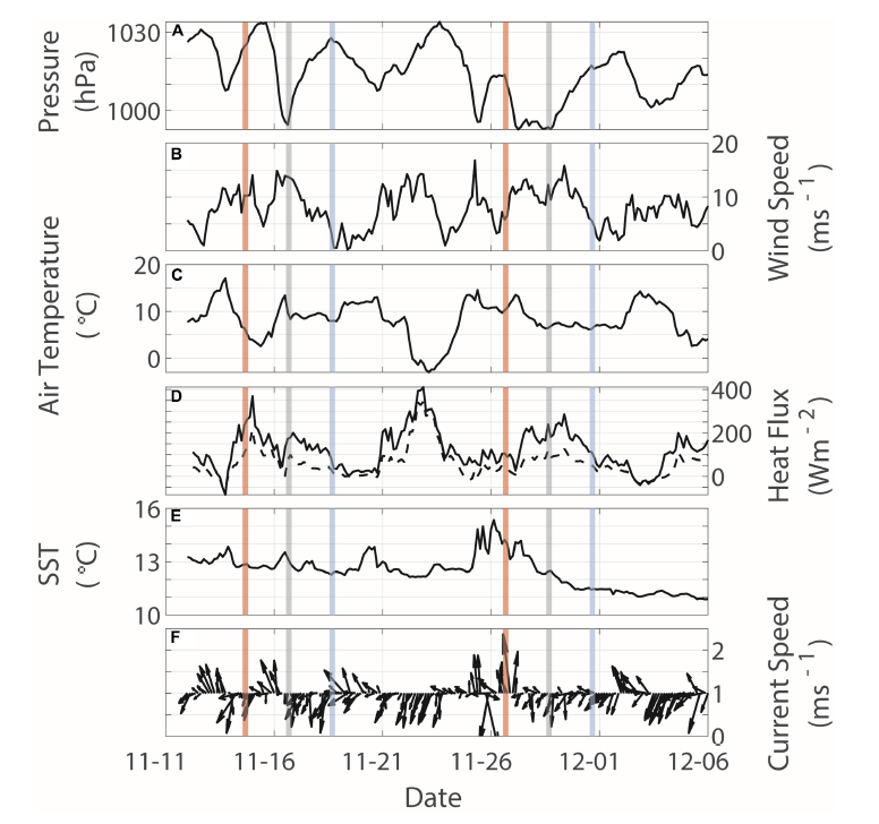

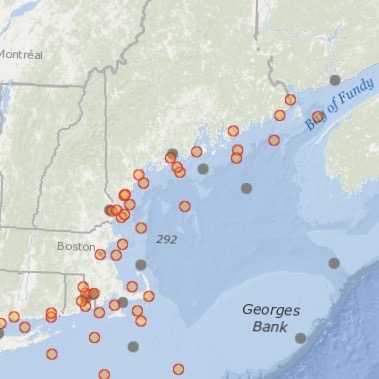

A case study was constructed to evaluate the impact of strong surface forcing events on surface and subsurface oceanographic conditions over the New England Shelf. Data from meteorological sensors on the Pioneer surface moorings, along with data from interdisciplinary sensors on the Pioneer profiler moorings, were used. Strong surface forcing was defined by anomalously low sea level pressure, more than three standard deviations below the mean of data from May 2015 to August 2018. Twenty-eight events were identified in the full record. Eight events in 2018 were selected for further analysis, and two of those were reported in the study (Figure 24).
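The event-selection step can be sketched in a few lines of code. This is an illustrative reading, not the authors' published method: it assumes the threshold is the record mean minus three standard deviations and treats each consecutive run of samples below that threshold as one event. The function name and the synthetic series are hypothetical.

```python
from statistics import mean, stdev


def find_low_pressure_events(pressure_hpa, n_sigma=3.0):
    """Return (start_index, end_index) pairs for runs of anomalously low pressure.

    A sample is anomalous when it lies more than n_sigma standard
    deviations below the mean of the whole record (assumed definition).
    """
    threshold = mean(pressure_hpa) - n_sigma * stdev(pressure_hpa)
    events = []
    start = None
    for i, p in enumerate(pressure_hpa):
        if p < threshold:
            if start is None:
                start = i  # a new event begins
        elif start is not None:
            events.append((start, i - 1))  # the event ended on the previous sample
            start = None
    if start is not None:
        # The record ended while an event was still in progress.
        events.append((start, len(pressure_hpa) - 1))
    return events


if __name__ == "__main__":
    # Synthetic record: background near 1015 hPa with one three-sample dip.
    series = [1014.0 if i % 2 == 0 else 1016.0 for i in range(200)]
    series[100:100] = [980.0, 980.0, 980.0]
    print(find_low_pressure_events(series))
```

With real mooring data, the returned index pairs would be mapped back to timestamps, and the minimum within each run would give the event peak.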

[media-caption path="https://oceanobservatories.org/wp-content/uploads/2021/07/CGSN-Highlight.png" link="#"]Figure 24. Two surface forcing events (16 and 27 November) identified from the time series of surface forcing at the Pioneer Central surface mooring. Vertical lines indicate the peak of the anomalous low-pressure events (gray), as well as times 48 h before (red) and after (blue). (A) sea level pressure, (B) wind speed, (C) air temperature, (D) latent (solid) and sensible (dashed) heat fluxes, (E) sea surface temperature, and (F) surface current speed and direction. [/media-caption]

The impact of surface forcing on subsurface conditions was evaluated using profile data near local noon on the day of the event, as well as 48 h before and after (Figure 24). Subsurface data revealed a shallow (40-60 m) salinity intrusion prior to the 16 November event, which dissipated during the event, presumably by vertical mixing and concurrent with increases in dissolved oxygen and decreases in colored dissolved organic matter (CDOM). At the onset of the 27 November event, nearly constant temperature, salinity, dissolved oxygen and CDOM to depths of 60 m were seen, suggesting strong vertical mixing. Data from multiple moorings allowed the investigators to determine that the response to the first event was spatially variable, with indications of slope water of Gulf Stream origin impinging on the shelf. The response to the second event was more spatially uniform, and was influenced by the advection of colder, fresher and more oxygenated water from the north.

The authors note that the case study shows the potential to address various interdisciplinary oceanographic processes, including across- and along-shelf dynamics, biochemical interactions, and air-sea interactions resulting from strong storms. They also note that long-term coastal datasets with multidisciplinary observations are relatively few, so that the Pioneer Array data allow hypothesis-driven research into topics such as the climatology of the shelfbreak region, seasonal variability of Gulf Stream meanders and warm-core rings, the influence of extreme events on shelf biogeochemical response, and the influence of a warming climate on shelf exchange.

In the context of the OOI-ECS, the authors note that the study was successfully completed using open-source data across institutional and geographic boundaries, within a resource-limited environment. Interpretation of results required multiple subject matter experts in different disciplines, and the OOI-ECS was seen as well-suited to “team science” using an integrative, collaborative and interdisciplinary approach.

______________________________________________________________________________________________

Levine, RM, KE Fogaren, JE Rudzin, CJ Russoniello, DC Soule, and JM Whitaker (2020) Open Data, Collaborative Working Platforms, and Interdisciplinary Collaboration: Building an Early Career Scientist Community of Practice to Leverage Ocean Observatories Initiative Data to Address Critical Questions in Marine Science. Front. Mar. Sci. 7:593512. doi: 10.3389/fmars.2020.593512.

Read More

Recommended CTD Resources

Hydrographer Leah McRaven (PO WHOI) from the US OSNAP team provided the following CTD resources to help researchers and others better understand how she and the Irminger Sea Array team are working with the near real-time data being provided by CTD sampling from the R/V Neil Armstrong:

There are four main sources considered in this list:

- Seabird Electronics is one of the most commonly used manufacturers of shipboard CTD systems. Their CTDs allow for integration of instruments from several other manufacturers.

- The Global Ocean Ship-Based Hydrographic Investigations Program (GO-SHIP) provides decadal resolution of the changes in inventories of heat, freshwater, carbon, oxygen, nutrients and transient tracers, with global measurements of the highest required accuracy to detect these changes. Their program has documented several methods and practices that are critical to high-accuracy hydrography, which are relevant to many CTD data users.

- The California Cooperative Oceanic Fisheries Investigations (CalCOFI) is a unique partnership of the California Department of Fish & Wildlife, NOAA Fisheries Service and Scripps Institution of Oceanography. CalCOFI conducts quarterly cruises off southern & central California, collecting a suite of hydrographic and biological data on station and underway. CalCOFI has made great effort to document methods that are helpful to those collecting hydrographic measurements near coastal regions.

- University-National Oceanographic Laboratory System (UNOLS) is an organization of 58 academic institutions and National Laboratories involved in oceanographic research and joined for the purpose of coordinating oceanographic ships’ schedules and research facilities.

Instrument care and use

Seabird training module on how sensor care and calibrations impact data: https://www.seabird.com/cms-portals/seabird_com/cms/documents/training/Module9_GettingHighestAccuracyData.pdf

Data acquisition and processing

Notes on CTD/O2 Data Acquisition and Processing Using Seabird Hardware and Software: https://www.go-ship.org/Manual/McTaggart_et_al_CTD.pdf

CalCOFI Seabird processing: https://calcofi.org/about-calcofi/methods/119-ctd-methods/330-ctd-data-processing-protocol.html

Seabird CTD processing training material: https://www.seabird.com/training-materials-download

Within this material, discussion on dynamic errors and how to address them in data processing: https://www.seabird.com/cms-portals/seabird_com/cms/documents/training/Module11_AdvancedDataProcessing.pdf

General overview documents and resources

GO-SHIP hydrography manual: https://www.go-ship.org/HydroMan.html

CalCOFI CTD general practices: https://www.calcofi.org/references/methods/64-ctd-general-practices.html

UNOLS site: https://www.unols.org/documents/water-column-sampling-and-instrumentation?page=2

Read More

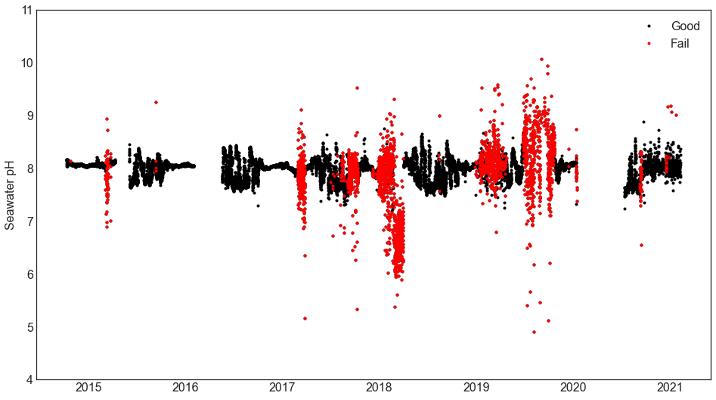

Jupyter Notebook Produces Quality Flags for pH Data

OOI uses the SAMI2-pH sensor from Sunburst Sensors, LLC to measure seawater pH throughout the different arrays. Assessing the data quality from this instrument is an involved process as there are multiple parameters produced by the instrument that are then used to calculate the seawater pH. These measurements are subject to different sources of error, and those errors can propagate through the calculations to create an erroneous seawater pH value. Based upon the vendor documentation and MATLAB code Sunburst provides to convert the raw measurements, OOI data team members have created a set of rules from those different measurements to flag the pH data as either pass, suspect or fail.

The resulting flags can be used to remove failed data from further analysis. They can also be used to help generate annotations for further Human in the Loop (HITL) QC checks of the data to help refine quality metrics for the data. OOI team member, Chris Wingard (OSU), has written up the QC process as a Python Jupyter notebook. This notebook and other example notebooks are freely available to the scientific community via the OOI GitHub site (within the OOI Data Team Python toolbox accessed from https://oceanobservatories.org/community-tools/ ).

In this notebook, Wingard shows how the quality rules can be used to remove bad pH data from a time series, and how they can be used to then create annotations. The impact of using these flags is shown with a set of before and after plots of the seawater pH as a function of temperature. The quality controlled data can then be used to estimate the seasonal cycle of pH to set climatological quality control flags.

Here an example is shown using data from a pH sensor on the Oregon Inshore Surface Mooring (CE01ISSM) near surface instrument frame (NSIF), deployed at 7 m depth (site depth is 25 m).

[media-caption path="https://oceanobservatories.org/wp-content/uploads/2021/07/EA-Highlight.png" link="#"]Figure 25: pH data from the Oregon Inshore Surface Mooring (CE01ISSM) near surface instrument frame (NSIF). Good data are shown in black, failed data in red. Note that simple range tests on the final calculated pH are often not enough to distinguish good from failed data. The automated QC processing examines intermediate measurements and fails data if intermediate measurements are outside acceptable ranges and propagated to final measurements.[/media-caption]

[media-caption path="https://oceanobservatories.org/wp-content/uploads/2021/07/EA-highlight-2.png" link="#"]Figure 26: Good data together with annual cycles (red) constructed with available good data from initial deployment through 2021. Data that fall outside three standard deviations of the climatology are flagged as suspect. Simple range tests for suspect (cyan) and failed (magenta) data are also shown. The annual cycle at this site is strongly influenced by annual summer upwelling and winter storms and river plumes. The summer decrease in pH is consistent with cold, relatively acidic upwelled water high in CO2 (see e.g., Evans et al., 2011).[/media-caption]
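To make the flagging logic concrete, here is a minimal sketch of the two tests mentioned in the figure captions: a simple range test on the final pH and a three-standard-deviation climatology test. It is not Wingard's notebook code. The function names and the range limits (6.9 to 9.0) are hypothetical, and the intermediate-measurement rules that the OOI process relies on are not reproduced here.

```python
import math
from collections import defaultdict
from statistics import mean, stdev

PASS, SUSPECT, FAIL = "pass", "suspect", "fail"


def monthly_climatology(samples):
    """Build {month: (mean, std)} from (month, pH) pairs of known-good data."""
    by_month = defaultdict(list)
    for month, ph in samples:
        by_month[month].append(ph)
    # At least two values are needed to estimate a standard deviation.
    return {m: (mean(v), stdev(v)) for m, v in by_month.items() if len(v) >= 2}


def flag_ph(ph, clim_mean, clim_std, fail_range=(6.9, 9.0), n_sigma=3.0):
    """Return a pass, suspect, or fail flag for one pH value.

    fail_range is an illustrative limit, not an OOI-specified one.
    """
    low, high = fail_range
    if ph is None or math.isnan(ph) or not (low <= ph <= high):
        return FAIL
    if abs(ph - clim_mean) > n_sigma * clim_std:
        return SUSPECT
    return PASS


if __name__ == "__main__":
    good = [(1, 8.00), (1, 8.02), (1, 7.98), (7, 7.80), (7, 7.82), (7, 7.78)]
    clim = monthly_climatology(good)
    for value in (8.01, 8.20, 5.00):
        print(value, flag_ph(value, *clim[1]))
```

A monthly climatology is used here only for simplicity; a fitted annual cycle like the one in Figure 26 would slot in by supplying a different mean and standard deviation for each observation time.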

Evans, W., B. Hales, and P. G. Strutton (2011), Seasonal cycle of surface ocean pCO2 on the Oregon shelf, J. Geophys. Res., 116, C05012, doi:10.1029/2010JC006625.

Read More

Easing Sharing of Glider Data

The OOI’s Coastal and Global Array teams regularly use Teledyne-Webb Slocum Gliders to collect ocean observations within and around the array moorings. The gliders fly up and down the water column from the surface down to a maximum depth of 1000 meters, collecting data such as dissolved oxygen concentrations, temperature, salinity, and other physical parameters to measure ocean conditions.

OOI shares its glider data with the Integrated Ocean Observing System (IOOS) Glider Data Assembly Center (DAC). The Glider DAC is a national repository for glider data sets and a centralized location for their wide distribution and use. It allows researchers to access and analyze glider data sets using common tools regardless of the glider type or organization that deployed the glider.

OOI serves data to these repositories in two ways. When the gliders are in the water, data are telemetered, providing near real-time data to these platforms. Once the gliders are recovered, data are downloaded, metadata provided, and data are resubmitted to the Glider DAC as a permanent record.

The behind-the-scenes process of transmitting this huge amount of data is quite complex. OOI Data Team members Collin Dobson of the Coastal and Global Scale Nodes at Woods Hole Oceanographic Institution (WHOI) and Stuart Pearce of the Coastal Endurance Array at Oregon State University (OSU) teamed up to streamline the process and catch up on a backlog of recovered data submissions.

Pearce took the lead in getting the OOI data into the DAC. In 2018, he began writing code for a system to transmit near real-time and recovered data. Once the scripts (processing code) were operational by about mid-2019, Pearce implemented them to streamline the flow of Endurance Array glider data into the DAC. Dobson then adopted the code and applied it to the transmission of glider data from the Pioneer, Station Papa, and Irminger Sea Arrays into the repository.

As it turned out, the timing was optimal. “I finished my code at the same time that the Glider DAC allowed higher resolution recovered datasets to be uploaded,” said Pearce. “So I was able to adjust my code to accommodate the upload of any scientific variable as long as it had a CF-compliant standard name to go with it.” This opened up a whole range of data that could be transmitted in a consistent fashion to the DAC. CF refers to the “Climate and Forecast” metadata conventions that provide community-accepted guidance for metadata variables and set standards for designating time ranges and locations of data collection. Dobson gave an example of the naming convention for density: sea_water_density.

“Being CF compliant ensures your data have the required metadata and makes the data so much more usable across the board,” added Dobson. “If I wanted to include oxygen as a variable, for example, I have to make sure to use the CF standard name for dissolved oxygen and report the results in CF standard units.”
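As a rough illustration of what that check involves, the sketch below verifies that each variable carries a CF standard name and units before submission. It is not Pearce's code. The variable keys and the helper name are hypothetical, and the table is a small illustrative subset of CF standard names shown with their canonical units.

```python
# Illustrative subset of CF standard names with canonical units.
CF_VARIABLES = {
    "density": {
        "standard_name": "sea_water_density",
        "units": "kg m-3",
    },
    "salinity": {
        "standard_name": "sea_water_practical_salinity",
        "units": "1",
    },
    "oxygen": {
        "standard_name": "mole_concentration_of_dissolved_molecular_oxygen_in_sea_water",
        "units": "mol m-3",
    },
}

REQUIRED_ATTRS = ("standard_name", "units")


def missing_cf_attributes(variables):
    """Return {variable: [missing attribute names]} for incomplete metadata."""
    problems = {}
    for name, attrs in variables.items():
        missing = [a for a in REQUIRED_ATTRS if not attrs.get(a)]
        if missing:
            problems[name] = missing
    return problems


if __name__ == "__main__":
    dataset = {
        "density": dict(CF_VARIABLES["density"]),
        "oxygen": {"units": "mol m-3"},  # standard_name left out on purpose
    }
    print(missing_cf_attributes(dataset))
```

A real submission pipeline would also confirm that each name appears in the current CF standard name table and that the reported units are convertible to the canonical ones.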

The Endurance Array team was the first group to add any of the non-CTD variables into the Glider DAC. This important step forward was recognized by the glider community, and was announced at a May 2019 workshop at Rutgers with 150 conveyors of glider data in attendance. One of Pearce’s gliders was used as the example of how and what could be achieved with the new code.

To help expedite the transfer of all gliders into the DAC, Pearce made his code open access. The additional metadata will help advance the work of storm forecasters, researchers, and others interested in improving understanding of ocean processes.

Read More

Data Explorer v1.1 Launches

Since its inaugural launch in October 2020, OOI has been working with users of Data Explorer to learn what features worked for them, which could be improved, and what could be added to optimize users’ experiences. This input has been put into practice and is now available for further testing on Data Explorer v1.1.

Improvements made to this version include the addition of five new instrument data types: Wire-following, Surface-piercing, Cabled Deep and Shallow Profilers, and Cabled Single Point Velocity Meters. Changes were made to improve the display and use of ERDDAP data. It is now possible to print custom configurations of time-series and data comparison charts.

A global search capability was added to allow users to use search terms to discover data sets in the Data Explorer. The search and navigation functions were tweaked to also find the data sets across all instruments and times. Other behind-the-scenes fixes were implemented to improve the site’s overall operability and functionality for users. The release notes can be viewed here.

“This version of Data Explorer incorporates suggestions from its growing community of users. We’re pleased to have received feedback that is serving to make Data Explorer a tool that meets users’ needs, which is our ultimate goal,” said Jeffrey Glatstein, OOI Data Delivery Lead and Senior Manager of Cyberinfrastructure.

A preview of the new features of Data Explorer v1.1 was held on 9 April 2021 and can be viewed below:

[embed]https://youtu.be/WhXgQ5qe78E[/embed]

Read More

Expanding Reach of OOI Data

Pioneer Array data is now available on NERACOOS’ new Mariner’s Dashboard. This is but one example of how OOI data are integrated into other data repositories to maximize their benefit and use.

OOI shares data with partner repositories and institutions that host similar data but have different user bases. These partnerships expand the data available for forecasting models, help provide insight into current ocean conditions, and serve as important resources for many ranging from fishers and other maritime users to land-based researchers and students.

With the exception of the Station Papa Array, the OOI Coastal and Global Arrays maintain surface buoys. Instruments deployed on these buoys measure meteorological variables such as air temperature, barometric pressure, northward and eastward wind velocities, precipitation, solar radiation, and surface water properties of sea surface temperature and salinity. Other instruments on the moorings collect wave data, such as significant wave height, period, and direction. These data are then consumed by national and regional networks to improve accuracy of weather forecasting models.

The Regional Cabled Array (RCA) consists of fiber-optic cables off the Oregon coast that provide power, bandwidth, and communication to seafloor instrumentation and moorings with instrumented profiling capabilities. A diverse array of geophysical, chemical, and biological sensors, a high-definition camera, and digital still cameras on the seafloor and mooring platforms provide real-time information on processes operating on and below the seafloor and throughout the water column, including recording of seafloor eruptions, methane plume emissions and climate change. These data are available for community use. Since 2015, the RCA has fed data into Incorporated Research Institutions for Seismology (IRIS), the primary source for data related to earthquakes and other seismic activity. In addition, data, including zooplankton sonar data, are being utilized within the Pangeo ecosystem for community visualization and access, and pressure data are incorporated into NOAA’s operational tsunami forecasting system.

Helping Improve Models and Forecasting

One of the recipients of OOI data is the National Data Buoy Center (NDBC), part of the National Oceanic and Atmospheric Administration’s (NOAA) National Weather Service. NDBC maintains a data repository and website, offering a range of standardized real-time and near real-time meteorological data. Data such as wind speed and direction, air and surface water temperature, and wave height and direction are made available to the broader oceanographic and meteorological community.

“Many researchers go to NDBC for their data,” said Craig Risien, a research associate with OOI’s Endurance Array and Cyberinfrastructure Teams, who helps researchers gain access to and use OOI data. “NDBC is a huge repository of data and it’s easy to access. So there’s a low barrier for researchers and students who are looking for information about wind speed, water temperature and a slew of other data. OOI contributing to this national repository significantly increases its data reach, allowing OOI data to be used by as many people as possible.”

OOI sea surface temperature data also make their way into the operational Global Real-Time Ocean Forecast System (RTOFS) at the National Centers for Environmental Prediction (NCEP), another part of NOAA’s National Weather Service. RTOFS ingests sea surface temperature and salinity data from all available buoys into the Global Telecommunications System (GTS). OOI glider data also are pushed in near real-time to the US Integrated Ocean Observing System Glider Data Assembly Center (DAC). From there, the data go to the GTS, where they can be used by operational modeling centers such as NCEP and the European Centre for Medium-Range Weather Forecasts.

The GTS is like a giant vacuum sucking up near real-time observations from all sorts of different platforms deployed all over the world. On a typical day, the GTS ingests more than 7,600 data points from fixed buoys alone. As a result of this vast input, researchers can go to the GTS, pull available data, and assimilate that information into any model to improve its prediction accuracy.

Advancing Forecasting of Submarine Eruptions

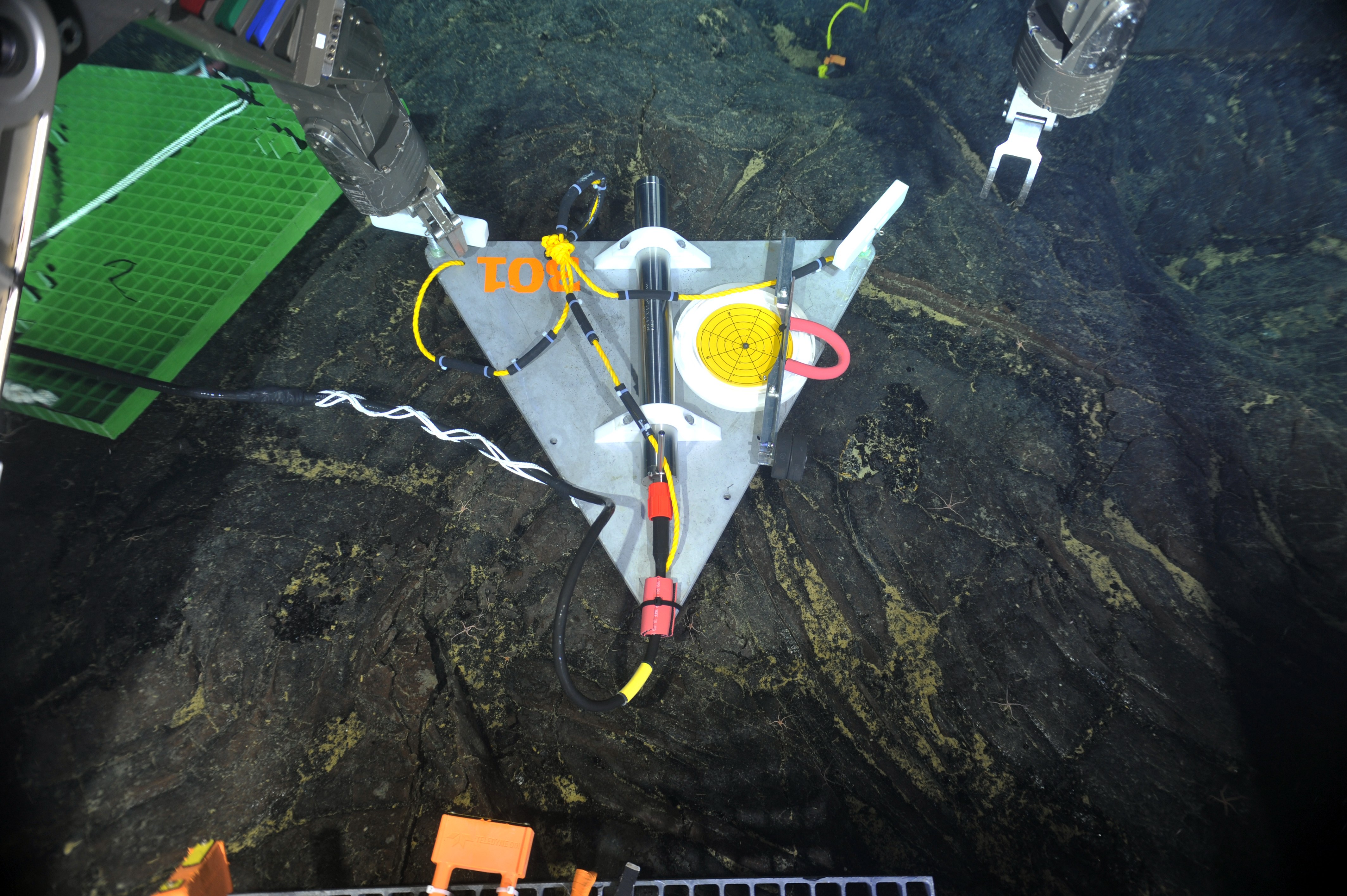

As the first U.S. ocean observatory to span a tectonic plate, RCA’s data are an invaluable contributor to IRIS’s collection. Since 2015, the user community has downloaded >20 Terabytes of RCA seismometer data from the IRIS repository. Fourteen different sampling locations include key sites at Axial Seamount on the Juan de Fuca mid-ocean ridge spreading center, near the toe of the Cascadia Margin and Southern Hydrate Ridge. RCA data are catalogued and available on the IRIS site, using the identifier “OO.”

[caption id="attachment_21046" align="alignleft" width="300"] Data from short period seismometers installed at RCA’s Axial Seamount and Southern Hydrate Ridge sites are streamed live to IRIS. Credit: UW/NSF-OOI/Canadian Scientific Submersible Facility, V13.[/caption]

“RCA is a critical community resource for seismic data. Axial Seamount, for example, which erupted in 1998 and April 2011, was the site of more than 8,000 earthquakes over a 24-hour period on April 24, 2015, marking the start of a large eruption,” explained Deb Kelley, PI of the RCA. “Being able to witness and measure seismic activity in real time is providing scientists with invaluable insights into eruption processes, which along with co-registered pressure measurements is making it possible to forecast when the next eruption may occur. We are pleased to share data from this volcanically and hydrothermally active seamount so researchers the world over can use it to better understand processes happening at mid-ocean ridges and advance forecasting capabilities for the first time of when a submarine eruption may occur.”

Providing Data with Regional Implications

[caption id="attachment_21047" align="alignright" width="203"] Data from Endurance Array buoy 46100 are fed into WCOFS, where they are accessible to maritime users. Credit: OSU[/caption]

OOI also provides data to regional ocean observing partners. Data from two Endurance Array buoys (46099 and 46100), for example, are fed into a four-dimensional U.S. West Coast Operational Forecast System (WCOFS), which serves the maritime user community. WCOFS generates water level, current, temperature and salinity nowcast and forecast fields four times per day. The Coastal Pioneer Array is within the future Northeastern Coast Operational Forecast System (NECOFS). Once operational, Pioneer’s observations will potentially be used for WCOFS data assimilation scenario experiments.

Coastal Endurance Array data are shared with the Northwest Association of Networked Ocean Observing Systems (NANOOS), which is part of IOOS, and the Global Ocean Acidification Observing Network (GOA-ON). Endurance data are ingested by the NANOOS Visualization System, which provides easy access to observations, forecasts, and data visualizations. Likewise, for GOA-ON, the Endurance Array provides observations useful for measuring ocean acidification.

Data from three of the Pioneer Array buoys also are part of the Mariners’ Dashboard, a new ocean information interface at the Northeastern Regional Association of Coastal Ocean Observing Systems (NERACOOS). Visitors can use the Dashboard to explore the latest conditions and forecasts from the Pioneer Inshore (44075), Central (44076), and Offshore (44077) mooring platforms, in addition to 30+ other observing platforms throughout the Northeast.

“We are working hard to distribute the OOI data widely through engagement with multiple partners, which together are helping inform science, improve weather and climate forecasts, and increase understanding of the ocean,” added Al Plueddemann, PI of the Coastal and Global Scale Nodes, which include the Pioneer, Station Papa, and Irminger Sea Arrays.

Read More

Pioneer Data Now on New Mariners’ Dashboard

Data from three of the Ocean Observatories Initiative’s (OOI) Pioneer Array buoys are now part of the Mariners’ Dashboard, a new ocean information interface launched by our partners at NERACOOS (Northeastern Regional Association of Coastal Ocean Observing Systems). Visitors can use the Dashboard to explore the latest conditions and forecasts from the Pioneer Inshore, Central, and Offshore mooring platforms, in addition to 30+ other observing platforms throughout the Northeast.

The Mariners’ Dashboard delivers high-quality, timely data from a growing network of buoys and sensors into the hands of mariners heading to sea. The data provided by the Pioneer moorings are particularly valuable because there are few other observing platforms in the highly traveled and productive shelf break region.

Observations provided range from air pressure and temperature, sea surface temperature, wave height, direction, velocity, and duration to salinity. Check out the Pioneer Array’s contributions to the wealth of information on the new Mariners’ Dashboard here:

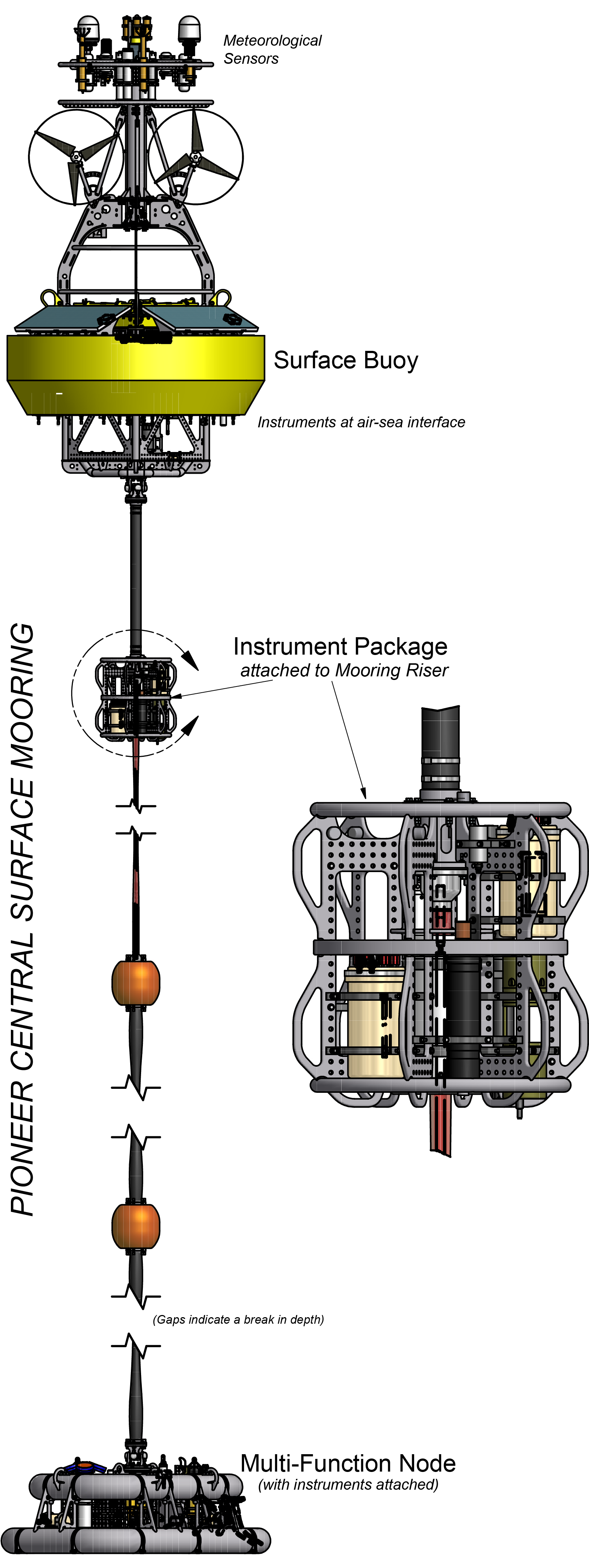

[caption id="attachment_20963" align="alignleft" width="113"] Components used to collect and transmit ocean observations to shore.[/caption]

Pioneer offshore: https://mariners.neracoos.org/platform/44077

Pioneer central: https://mariners.neracoos.org/platform/44076

Pioneer inshore: https://mariners.neracoos.org/platform/44075

“We are pleased to see the Pioneer Array data being made more widely available through the Mariners’ Dashboard to help provide information about current ocean conditions,” said Al Plueddemann, head of the Ocean Observatories Initiative Coastal and Global Scale Nodes team, which includes the Pioneer Array. “This is just one example of how OOI data, which are freely available to anyone with an Internet connection, are being put to good use.”

Read More